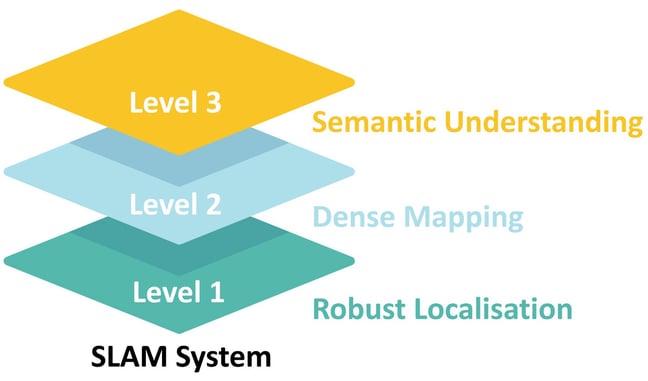

As a team of specialists with a history of some of the most important innovations in SLAM, we have a long-term view of where the technology is going. We see SLAM as nothing less than a crucial enabling layer for next-generation spatial computing and general embodied AI, and believe that it is still near the beginning of its full development. Along the way, however, we can identify three broad levels of cumulative competence, each of which is already very valuable for applications:

Level 1 — Robust localisation

Level 2 — Dense scene mapping

Level 3 — Map-integrated semantic understanding

Most SLAM solutions that have already appeared in products have focused on Level 1. In any SLAM system, localisation and mapping must be carried out together, but when the main desired output is localisation, the map can consist of a sparse set of points or other simple landmark features. These are sufficient to determine a moving device’s pose, but do not provide detailed information about the surrounding scene. The positions of the landmarks are estimated jointly and probabilistically with that of the device, via either a sequential filter or non-linear optimisation. MonoSLAM and PTAM are, respectively, pioneering examples of these two estimation approaches in monocular visual SLAM. OKVIS extends the optimisation approach to fuse information from multiple sensors, including additional cameras and inertial measurement units.
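To make the idea of joint estimation concrete, here is a minimal sketch under strong simplifying assumptions: a 1D world, linear measurements, and equal weighting of all measurements. Real systems estimate full 3D poses from image measurements using filtering or non-linear optimisation, so this is only an illustration of the principle that device positions and landmark positions are solved for together. The names `joint_estimate` and `solve_linear` and the measurement format are hypothetical and are not taken from any of the systems mentioned above.

```python
def solve_linear(A, b):
    """Solve the square system A x = b by Gaussian elimination with pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("system is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def joint_estimate(num_poses, num_landmarks, odometry, observations):
    """Jointly estimate 1D device positions and landmark positions.

    odometry:     list of (k, u)    meaning x[k+1] - x[k] = u
    observations: list of (k, j, z) meaning l[j]   - x[k] = z
    The first device position is anchored at 0 to fix the reference frame.
    """
    n = num_poses + num_landmarks
    rows, rhs = [], []

    # Anchor the first pose at the origin.
    row = [0.0] * n
    row[0] = 1.0
    rows.append(row)
    rhs.append(0.0)

    # Relative motion between consecutive poses.
    for k, u in odometry:
        row = [0.0] * n
        row[k + 1], row[k] = 1.0, -1.0
        rows.append(row)
        rhs.append(u)

    # Landmark positions measured relative to the device.
    for k, j, z in observations:
        row = [0.0] * n
        row[num_poses + j], row[k] = 1.0, -1.0
        rows.append(row)
        rhs.append(z)

    # Least-squares solution via the normal equations (A^T A) x = A^T b.
    H = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    g = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(n)]
    x = solve_linear(H, g)
    return x[:num_poses], x[num_poses:]


# Three device positions and two landmarks, all unknown at the start.
poses, landmarks = joint_estimate(
    num_poses=3,
    num_landmarks=2,
    odometry=[(0, 1.0), (1, 1.0)],
    observations=[(0, 0, 5.0), (1, 0, 4.0), (1, 1, -4.0), (2, 1, -5.0)],
)
```

Because every unknown appears in the same system of equations, an observation of a landmark from a later position also constrains the earlier positions, which is the essence of estimating the map and the trajectory together.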

Localisation allows tracking of the pose of a VR headset, or positioning and path following for a robot or drone. This is often useful in itself or can be combined with simple scene recognition, such as estimating the main planes in a scene by fitting them to landmark points.
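As an illustration of fitting a plane to landmark points, the following is a minimal sketch that assumes the plane can be written as z = a·x + b·y + c (so it is not vertical in the chosen coordinate frame) and that all the points passed in belong to the plane. A practical system would also need to reject outliers and handle arbitrary plane orientations. The function names `fit_plane` and `det3` are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to a list of (x, y, z) points.

    Returns (a, b, c). Assumes the plane is not vertical.
    """
    n = len(points)
    if n < 3:
        raise ValueError("need at least three points")

    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)

    # Normal equations M @ [a, b, c] = v for the least-squares problem.
    M = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(n)]]
    v = [sxz, syz, sz]

    d = det3(M)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate (for example, collinear)")

    # Cramer's rule: replace one column of M with v for each unknown.
    coeffs = []
    for col in range(3):
        Mc = [row[:] for row in M]
        for r in range(3):
            Mc[r][col] = v[r]
        coeffs.append(det3(Mc) / d)
    return tuple(coeffs)


# Landmark points lying on the plane z = 2x - y + 3.
landmarks = [(0, 0, 3), (1, 0, 5), (0, 1, 2), (1, 1, 4), (2, 1, 6)]
a, b, c = fit_plane(landmarks)
```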

At Level 2, dense scene mapping can augment or replace sparse mapping. Dense mapping means that the SLAM system aims to estimate the shape of every surface in a scene. This permits obstacle avoidance and free-space planning for robots, or augmented reality systems that can attach virtual objects to arbitrary surfaces. Dense maps can be represented in several different ways, including meshes, surfels or volumetric implicit functions. If there is still a sparse landmark feature map in the background, then the dense map is defined relative to this framework and would not usually be used for localisation. In a pure dense SLAM method (such as DTAM), the dense map itself is the basis for localisation via whole-image alignment. In either approach, large-scale, loop-closure-capable dense SLAM is still a challenging problem.

SLAM will continue to evolve towards Level 3: integrated real-time geometric and semantic understanding of the scene around a device, and finally fully meaningful mapping at the level of specific known objects in known poses. In robotics, this will enable intelligent long-term scene interaction: robots which know what is where in a scene in a general way. In AR, it will enable smart automatic scene annotation, memory augmentation and telepresence. The route to object-level SLAM is the subject of much ongoing research, and deep learning will surely play a crucial role, either in semantic labelling of dense scene maps or in direct object recognition and pose estimation. The crucial issue is how to combine these capabilities with geometrical estimation in a ‘SLAM’ manner which enables scalable, real-time, consistent semantic mapping.

Much research and development is needed to achieve these general capabilities, and they cannot yet be fully achieved even in research labs. When the constraints on price, power and size imposed by real products are taken into account, the challenge is even greater. Even at Level 1, there is much to improve in the robust localisation performance of current SLAM systems, which have particular trouble in situations with very rapid motion, large lighting variations or texture-poor scenes.

High-performance SLAM is needed for a range of applications whose breadth is only just starting to be realised. Beyond VR, AR, wearable devices and autonomous driving, there are numerous applications in areas including drones, industrial automation and personal robotics. Robust Level 1 SLAM is the foundation on which all future solutions will be built, and SLAMcore will be offering such a system soon. As we build our technology, we believe that SLAMcore’s focus on sensor fusion and the use of novel camera technologies such as the event camera will produce a big leap forward in the robustness of SLAM-enabled products and pave the way for truly robust Level 3 SLAM.